Huffman coding

Huffman coding is an algorithm for the lossless compression of files based on the frequency of occurrence of a symbol in the file that is being compressed. In any file, certain characters are used more than others. Using binary representation, the number of bits required to represent each character depends upon the number of characters that have to be represented. Using one bit we

can represent two characters, i.e., 0 represents the first character and l represents the second character. Using two bits we can represent four characters, and so on. Unlike ASCII code, which is a fixed-length code using seven bits per character, Huffman compression is a variable-length coding system that assigns smaller codes for more frequently used characters and larger codes

for less frequently used characters in order to reduce the size of files being compressed and transferred.

For example, in a file with the following data: ‘XXXXXXYYYYZZ. The frequency of "X" is 6, the frequency of "Y" is 4, and the frequency of "Z" is 2. If each character is represented using a fixed-length code of two bits, then the number of bits required to store this file would be 24, i.e., (2 x 6) + (2): 4) + (2): 2] = 24. If the above data were compressed using Huffman compression, the more frequently occurring numbers would be represented by smaller bits, such as X by the code 0 (1 bit), Y by the code 10 (2 bits), and Z by the code 11 (2 bits}, the size of the file becomes 18, i.e., (h 6) + [2 x 4] + (2 x 2) = 18. In this example, more frequently occurring characters are

assigned smaller codes, resulting in a smaller number of bits in the final compressed file.

Huffman compression was named after its discoverer, David Huffman.

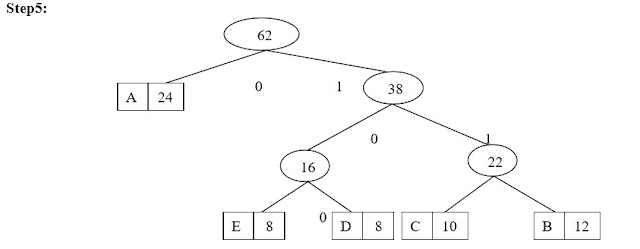

To generate Huffman codes, we should create a binary tree of nodes. Initially, all nodes are leaf nodes, which contain the symbol itself, and the weight (frequency of appearance) of the symbol. As a common convention, bit '0' represents following the left child, and a bit '1' represents following the right child. A finished tree has up to n leaf nodes and n — l. internal nodes. A Huffman tree that omits unused symbols produces the most optimal code lengths. The process essentially begins with the leaf nodes containing the probabilities of the symbol they represent, then a new node whose children are the 2 nodes with the smallest probability is created, such that the new node's probability is equal to the sum of the children's probability. With the previous 2 nodes merged into one node and with the new 11ode being now considered, the procedure is repeated until only one node remains in the Huffman tree. The simplest construction algorithm uses a priority queue where the 11ode with the lowest probability is given the highest priority:

Example

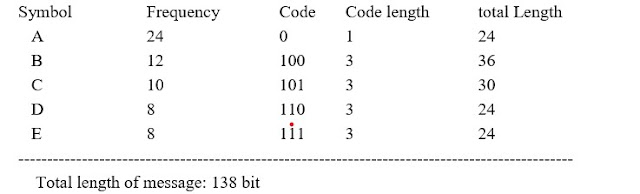

The following example is based on a data source using a set of five different symbols The

symbol's frequencies are:

Symbol Frequency

A 24

B 12

C 10

D 5

E 8

----> total 186 bits (with 3 bits per code word)

Algorithm

A greedy algorithm can construct Huffman code that is optimal prefix codes. A tree corresponding to optimal codes is constructed in a bottom-up manner starting from the |C| leaves and |C|-1 merging operations. Use priority queue Q to keep nodes ordered by frequency. Here the priority queue we considered is a binary heap.

HuffmanAlgo(C)

{

n = | C | ;

Q = C1

For(i = 1; i <= n - 1; i++)

{

2 = Allocate - Node();

x = Extract - Min(Q);

y = Extract - Min(Q);

left(z) = x;

right(z) = y;

f(z) = f(x) + (y);

Insert(Q, 2);

}

}

Analysis

We can use BuildHeap(C] to create a priority queue that takes O(11) time. Inside the for loop the expensive operations can be done in Oflogn) time. Since operations inside for loop execute for n-1 time total running time of the Huffman algorithm is O(11logn).

Comments

Post a Comment

Subscribe Us and Thanks for visiting blog.